Person Re-identification for Videos

- Algorithm:

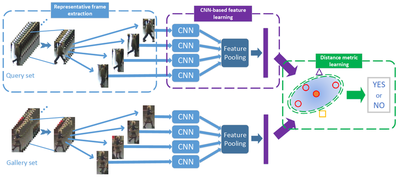

Unlike previous work, in this project we intend to extract a compact appearance representation from several representative frames, rather than from all frames, for video-based re-id. Compared to temporal-based methods, the proposed appearance model works more like the human visual system.

As shown in the figure above, given a walking sequence, we first split it into several segments corresponding to the different action primitives of a walking cycle. The most representative frames of a walking cycle are selected at the local maxima and minima of the Flow Energy Profile (FEP) signal. For each frame, we propose a CNN that learns features from the person's joint appearance information. Since different frames may carry different discriminative features, we introduce an appearance-pooling layer that preserves the salient appearance features of multiple frames, forming a discriminative feature descriptor for the whole video sequence.
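To make the frame-selection and pooling steps concrete, here is a minimal NumPy sketch under assumptions this page does not state: the FEP value of a frame is taken to be the sum of optical-flow magnitudes over the lower half of the frame (where leg motion dominates), the optical-flow fields are supplied precomputed, and appearance pooling is an element-wise max over per-frame feature vectors. The function names (`flow_energy_profile`, `select_key_frames`, `appearance_pooling`, `video_descriptor`) are hypothetical, and the per-frame CNN features are passed in as plain arrays rather than computed by a network.

```python
import numpy as np


def flow_energy_profile(flows):
    """Assumed FEP: per frame, sum of optical-flow magnitudes over the lower half.

    flows: array of shape (T, H, W, 2) holding per-frame (dx, dy) flow fields.
    Returns an array of shape (T,).
    """
    flows = np.asarray(flows, dtype=float)
    h = flows.shape[1]
    lower = flows[:, h // 2:, :, :]              # lower body: legs drive the walking cycle
    magnitude = np.linalg.norm(lower, axis=-1)   # per-pixel flow magnitude
    return magnitude.sum(axis=(1, 2))


def select_key_frames(fep):
    """Indices of strict local maxima and minima of a 1-D FEP signal."""
    fep = np.asarray(fep, dtype=float)
    mid = fep[1:-1]
    is_max = (mid > fep[:-2]) & (mid > fep[2:])
    is_min = (mid < fep[:-2]) & (mid < fep[2:])
    return np.flatnonzero(is_max | is_min) + 1   # shift back to original frame indices


def appearance_pooling(frame_features):
    """Assumed pooling: element-wise max, keeping each feature's strongest response."""
    return np.max(np.asarray(frame_features, dtype=float), axis=0)


def video_descriptor(flows, frame_features):
    """Hypothetical pipeline: FEP -> key frames -> pooled descriptor.

    frame_features: array of shape (T, D), one feature vector per frame
    (stand-in for the per-frame CNN output).
    """
    frame_features = np.asarray(frame_features, dtype=float)
    keys = select_key_frames(flow_energy_profile(flows))
    if keys.size == 0:                           # no extrema (e.g. static clip): use all frames
        keys = np.arange(len(frame_features))
    return appearance_pooling(frame_features[keys])
```

Max pooling is one natural reading of "preserving the salient features": a feature that responds strongly in only one key frame still survives in the video descriptor, whereas averaging across frames would dilute it.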

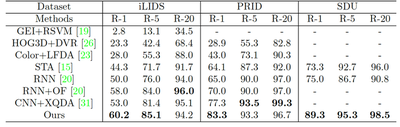

- Experimental Results: