Adversarial Attack on Object Detection

Unlike previous adversarial attack works, this project attacks object detectors by learning a perturbation on a contextual object in the input image, that is, on an object other than the ones whose detection we aim to disrupt.

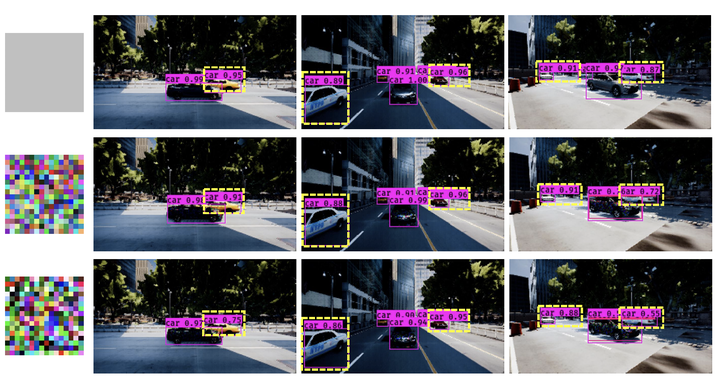

Our approach relies on an evolutionary search strategy to optimize a non-differentiable objective function: mapping an input (a camouflage pattern) to an output (the object detection accuracy) involves a complex 3D environment simulation. Given a batch of vehicles and a collection of simulated environments, our goal is to generate a camouflage for one of the vehicles that degrades the detectors' performance on the other, unpainted vehicles in those environments. Instead of placing a patch at a random location in the image, we directly change the appearance of an object in the scene and study the effect on the detection of other objects, which may make the attack more powerful and more general across scenarios.
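The objective described above can be sketched as a black-box fitness function. This is a minimal illustration under stated assumptions, not the project's code: `tile_pattern`, `toy_render_and_detect`, and `contextual_objective` are hypothetical names, the 3D simulator and detector are replaced by a toy stub, and per-vehicle detection confidence stands in for detection accuracy. The pattern is tiled over a texture because the algorithm description specifies a tiled painting style.

```python
import numpy as np

def tile_pattern(pattern: np.ndarray, height: int, width: int) -> np.ndarray:
    """Repeat an RGB pattern of shape (h, w, 3) to cover a (height, width, 3) texture."""
    h, w, _ = pattern.shape
    reps_y = -(-height // h)  # ceiling division
    reps_x = -(-width // w)
    return np.tile(pattern, (reps_y, reps_x, 1))[:height, :width, :]

def toy_render_and_detect(texture: np.ndarray, env_seed: int, n_other: int) -> np.ndarray:
    """HYPOTHETICAL stand-in for the 3D simulator plus detector.

    Returns one detection confidence in [0, 1] per unpainted vehicle. Here the
    confidence drops as the red channel of the painted texture exceeds its green
    and blue channels, purely so the objective responds to the pattern; a real
    pipeline would render the scene and run a detector such as YOLO_v3.
    """
    rng = np.random.default_rng(env_seed)
    base = rng.uniform(0.7, 0.95, size=n_other)  # environment-dependent baseline
    redness = texture[..., 0].mean() - texture[..., 1:].mean()
    return np.clip(base - 0.5 * max(redness, 0.0), 0.0, 1.0)

def contextual_objective(pattern, env_seeds, n_other=3, tex_hw=(64, 64)) -> float:
    """Mean detection confidence on the *unpainted* vehicles across environments.

    The attacker minimizes this value. It is obtained by (simulated) rendering
    and detection, so no gradient with respect to `pattern` is assumed.
    """
    texture = tile_pattern(pattern, *tex_hw)
    scores = [toy_render_and_detect(texture, s, n_other) for s in env_seeds]
    return float(np.mean(scores))

if __name__ == "__main__":
    gray = np.full((8, 8, 3), 0.5)                 # neutral pattern
    red = np.zeros((8, 8, 3)); red[..., 0] = 1.0   # saturated red pattern
    envs = range(5)                                # five toy "environments"
    print(contextual_objective(gray, envs), contextual_objective(red, envs))
```

Only function evaluations are available from such an objective, which is why a gradient-free evolutionary search fits this setting.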

Algorithm

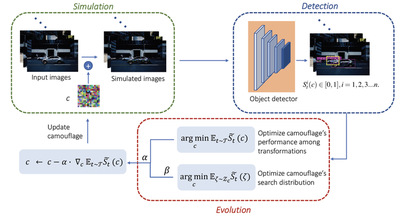

The framework of the proposed algorithm is shown in the figure above. The algorithm has three main phases: simulation, detection, and evolution. Let c denote a vehicle camouflage pattern represented as an RGB image. Painted onto the surface of a vehicle in a tiled style, such a camouflage can cause state-of-the-art object detectors to completely or partially fail to detect or classify that vehicle, regardless of viewing angle, distance, and environment. Here, we instead ask whether a camouflage pattern c painted on a given vehicle can mislead detectors into incorrectly detecting or classifying the other vehicles (without camouflage) in the same scene. After the simulated images are fed into the detectors and the detection results are obtained, we optimize the camouflage with respect to two aspects: its attack performance against the detectors and the search distribution over camouflage patterns.
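The simulation, detection, and evolution loop can be sketched with a generic evolution strategy. This is an assumption-laden illustration: `evolve` is a hypothetical name, and its update (sample from a diagonal Gaussian search distribution, keep the best candidates, refit the mean and standard deviation to them) is a simple elite-selection scheme similar to the cross-entropy method, not necessarily the update used in this project. The `objective` argument can be any black-box score of a flattened pattern in [0, 1] to minimize, such as a simulator-plus-detector score; the usage below substitutes a toy quadratic.

```python
import numpy as np

def evolve(objective, dim, pop_size=32, n_elite=8, iters=50, sigma0=0.5, seed=0):
    """Minimize a black-box `objective` with a simple Gaussian evolution strategy.

    Each generation samples candidates from the search distribution
    N(mean, diag(sigma^2)), scores them without using gradients, keeps the best
    `n_elite`, and refits mean and sigma to those elites.
    """
    rng = np.random.default_rng(seed)
    mean = np.full(dim, 0.5)    # start from a mid-gray pattern in [0, 1]
    sigma = np.full(dim, sigma0)
    best_x, best_f = mean.copy(), objective(mean)
    for _ in range(iters):
        # Sample candidate camouflages and score each one; in the real pipeline
        # this scoring step is where simulation and detection would happen.
        pop = np.clip(mean + sigma * rng.standard_normal((pop_size, dim)), 0.0, 1.0)
        fitness = np.array([objective(x) for x in pop])
        # Evolution: select the elites and update the search distribution.
        elite = pop[np.argsort(fitness)[:n_elite]]
        mean = elite.mean(axis=0)
        sigma = elite.std(axis=0) + 1e-3  # small floor keeps some exploration
        if fitness.min() < best_f:
            best_f = float(fitness.min())
            best_x = pop[np.argmin(fitness)].copy()
    return best_x, best_f

if __name__ == "__main__":
    # Toy black-box objective: squared distance to a hidden "target" pattern.
    target = np.linspace(0.1, 0.9, 12)
    toy = lambda x: float(np.sum((x - target) ** 2))
    x, f = evolve(toy, dim=12)
    print(f"start={toy(np.full(12, 0.5)):.3f}  best={f:.5f}")
```

Refitting both the mean and the spread is one simple way to realize "optimizing the search distribution": the mean tracks promising patterns while the shrinking spread narrows the search around them.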


Experiment Results

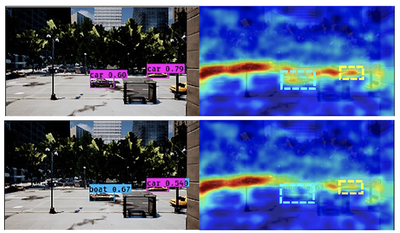

Grad-CAM visualizations of YOLO_v3 on images with a random camouflage and with the CCA camouflage.

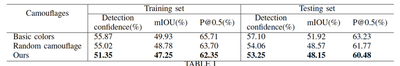

CCA performance on YOLO_v3.